How to Format JSON Data Ready for Splunk

Author: Laurence Everitt

Release Date: 28/10/2024

Splunk is fantastic at receiving structured data in any format and making sense of it for managers and technicians alike, so most blogs about Splunk ingestion take the form "How do I configure Splunk to work with … files?". In this case, however, I have been asked: "Hey, our developers want to set up their app logging to use JSON. What is the best JSON log format for easier Splunk searching?"

Splunk can make sense of any structured log, and it includes special handling for JSON, even shipping a default JSON sourcetype called "_json" (which you should not use; more on that later). However, it is not all plain sailing, and I hope that this blog will help you to get your JSON log ingestion running smoothly and quickly.

A JSON Primer

JSON stands for JavaScript Object Notation. It is commonly used to store objects in JavaScript (and in many other programming languages) and to transfer data between client and server. JSON files are plain text, but JSON has its own way of structuring data that is readily extensible and easy to read with little explanation. More details about what JSON is are here. However, the most important things to know about how JSON objects are structured are as follows:

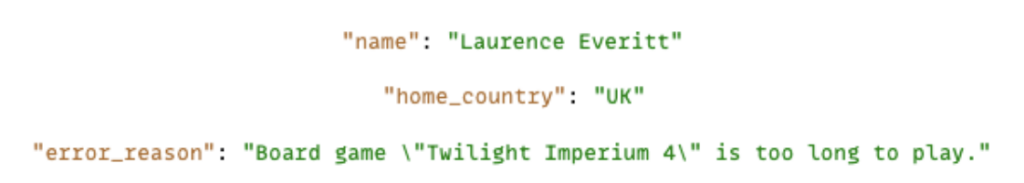

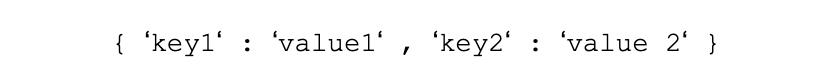

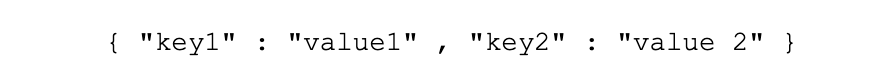

• JSON holds data as key-value pairs, with the key and the value separated by a colon. The key must be surrounded by double quotes, and string values must also be surrounded by double quotes, as illustrated below. Any double quotes inside a string must be escaped with a preceding backslash.

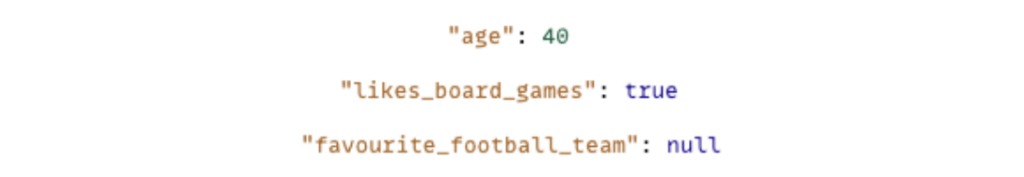

• Having said that, numeric, boolean (true/false) and null values should not be surrounded by double quotes (otherwise they become strings), such as below:

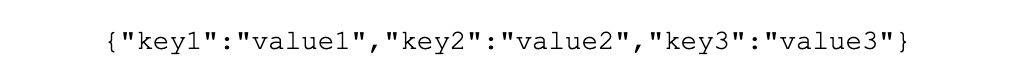

• If we want to group a number of fields together into an overall object that describes a single entity (such as a "consultant"), we surround the key-value pairs with curly brackets and separate them with commas, such as this:

• If we want to make an array of values, we surround the values with square brackets, separate them with commas and give the array a key name, as below:

• If we want to make an array of objects, we surround the objects with square brackets and separate them with commas, like this:
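To see how these structures fit together, here is a minimal Python sketch using only the standard json module. The "consultant" keys and values are invented for illustration; the point is how each Python type comes out as JSON:

```python
import json

# Hypothetical "consultant" record; every key and value here is made up for illustration.
consultant = {
    "first_name": "Jane",            # string: double-quoted in the JSON output
    "years_experience": 7,           # number: no quotes
    "certified": True,               # boolean: serialised as true
    "manager": None,                 # null: serialised as null
    "skills": ["Splunk", "Python"],  # array of values
    "projects": [                    # array of objects
        {"name": "SIEM rollout", "hours": 120},
        {"name": "Dashboard refresh", "hours": 35},
    ],
}

text = json.dumps(consultant)
print(text)

# Parsing the text back gives an equal Python structure, so the round trip is lossless.
assert json.loads(text) == consultant
```

The printed line starts `{"first_name": "Jane", "years_experience": 7, "certified": true, "manager": null, ...`, with the number, boolean and null left unquoted.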

More information about JSON and its structures can be found here. I also found using this online JSON validator useful for checking the JSON in this document, so I recommend it to you, too.

JSON Formatting for Splunk

Now that you have the basics of how JSON is structured, we can go into more detail about how to structure JSON to work best with Splunk.

Here are some recommendations for structuring your JSON logs so that you get the least friction from Splunk:

• Make sure that each event is its own JSON object, i.e. starting and ending with curly brackets. This lets Splunk recognise the event as JSON automatically and show the WHOLE event as JSON.

• Make sure that the log has a consistent structure (i.e. do not mix JSON with key-value pairs or Syslog in the same sourcetype). This reduces complexity enormously.

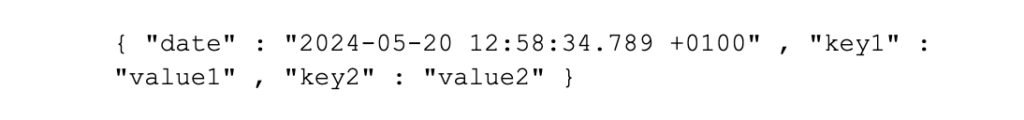
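A quick way to check the first two recommendations against an existing log is to try parsing every line as a JSON object. This is a minimal Python sketch; the non_json_lines helper and the sample lines (including the syslog-style and key=value lines) are hypothetical:

```python
import json

def non_json_lines(lines):
    """Return (line_number, text) for every line that is not a single JSON object."""
    problems = []
    for number, line in enumerate(lines, start=1):
        try:
            parsed = json.loads(line)
        except json.JSONDecodeError:
            problems.append((number, line))  # not JSON at all
            continue
        if not isinstance(parsed, dict):
            problems.append((number, line))  # valid JSON, but not an object
    return problems

# Hypothetical sample mixing a JSON event, a syslog-style line, a key=value line and an array.
sample = [
    '{"date": "2024-10-28T09:15:00+00:00", "action": "login"}',
    "Oct 28 09:15:01 host01 app[123]: user jsmith logged in",
    "date=2024-10-28T09:15:02+00:00 action=logout",
    '["not", "an", "object"]',
]
print(non_json_lines(sample))
```

Lines 2, 3 and 4 are reported: the first two are not JSON at all, and the last is valid JSON but an array rather than an object.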

• Put the event's date/time field at the beginning of the event (not in the middle or at the end), i.e. as the first key-value pair WITHIN the JSON object, and NOT outside of it. This makes it easier for both humans and Splunk to find the date. So this is good:

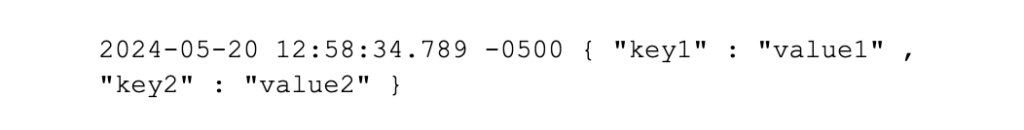

• However, the below is bad: the time outside of the JSON object makes the event invalid JSON, which stops Splunk from showing it in the pretty JSON format:

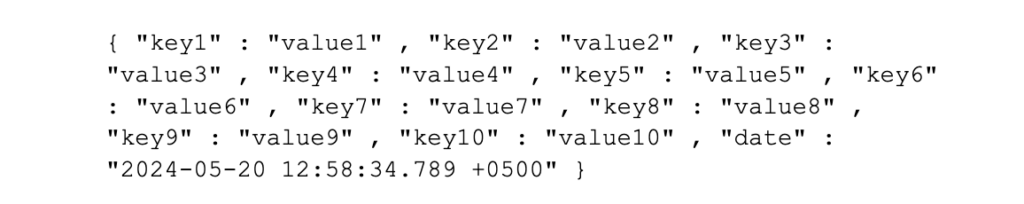

• Placing the date at the beginning of the event also makes TIME_PREFIX and MAX_TIMESTAMP_LOOKAHEAD easier to configure, so this structure is not ideal:

• NOTE: As a test of this date-placement recommendation in regex101, when the TIME_PREFIX was at the start of the event, the regex took 13 steps to find the date, but when I moved it to the end of the event, it took between 49 and 116 steps (depending upon the complexity of the regex). That makes this Aggregation Queue operation relatively expensive, so this optimisation is worthwhile.

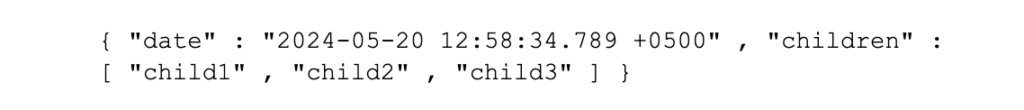
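The timestamp recommendations above can be sketched in Python. This is a minimal sketch, assuming a key called "date" (as in the Worked Example below) holding an ISO 8601 timestamp with a UTC offset; the make_event helper and the regular expression are hypothetical illustrations of a TIME_PREFIX-style pattern, not Splunk's own implementation:

```python
import json
import re
from datetime import datetime, timezone

def make_event(action, **fields):
    """Hypothetical helper: build one JSON event with the timestamp as its first key."""
    # ISO 8601 with milliseconds and an explicit UTC offset, e.g. 2024-10-28T09:15:00.123+00:00
    event = {"date": datetime.now(timezone.utc).isoformat(timespec="milliseconds")}
    event["action"] = action
    event.update(fields)
    # Python dicts keep insertion order, so "date" is serialised first.
    return json.dumps(event)

good = make_event("login", username="jsmith")

# A timestamp written outside the object makes the whole line invalid JSON.
bad = "2024-10-28T09:15:00.000+00:00 " + json.dumps({"action": "login"})
try:
    json.loads(bad)
except json.JSONDecodeError as err:
    print("bad line is not valid JSON:", err.msg)

# An anchored pattern, similar in spirit to a TIME_PREFIX of ^\{"date":\s*",
# only has to look at the first few characters of each event.
time_prefix_re = re.compile(r'^\{"date":\s*"')
LOOKAHEAD = 29  # like MAX_TIMESTAMP_LOOKAHEAD: characters to read after the prefix

match = time_prefix_re.match(good)
timestamp = good[match.end():match.end() + LOOKAHEAD]
print(good)
print("timestamp found:", timestamp)
```

The key name, the millisecond precision and the UTC offset are choices made for this sketch; any consistent format that carries time zone information would do.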

• Avoid duplicate key names within an object: they make searching the data difficult, and the JSON specification says that the keys within an object should be unique. Instead, where an event contains several values with the same name and purpose, use a JSON array (as described above), so this is good practice:

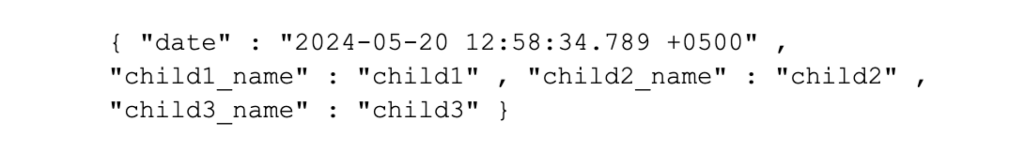

• The following is valid JSON but is not recommended, as it either restricts the number of children or makes searching for the children’s names harder in Splunk:

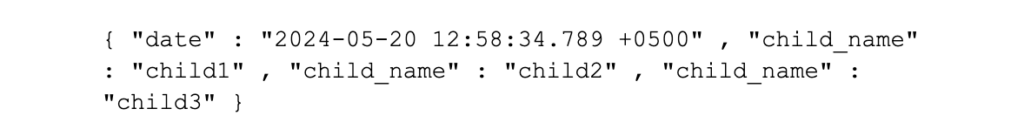

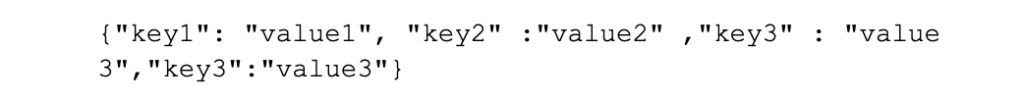

• And the following should be avoided because of its duplicate key names (many JSON parsers silently keep only the last value, and validators may reject it):

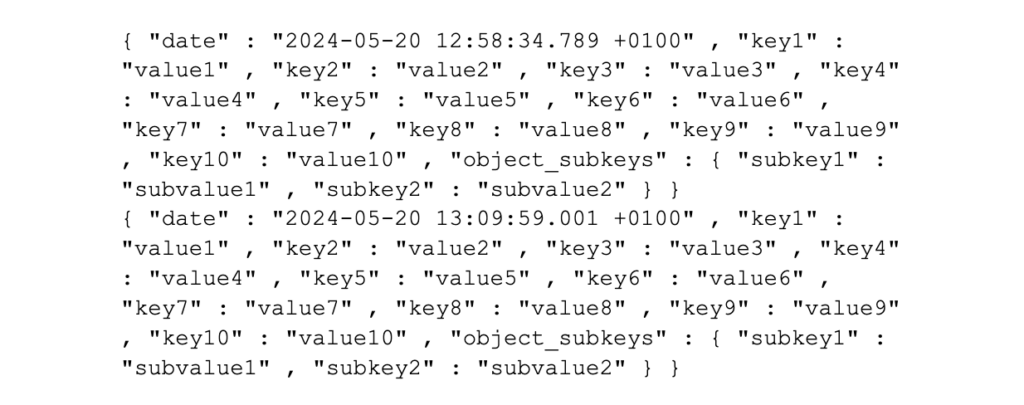

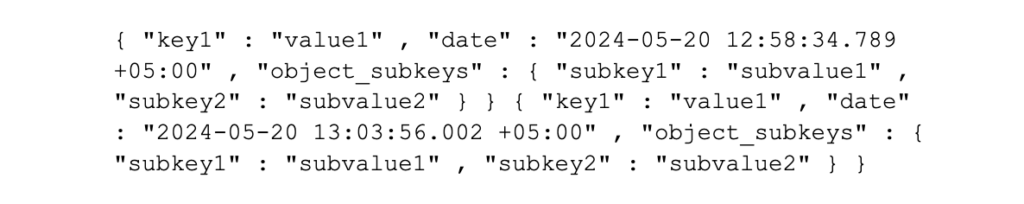

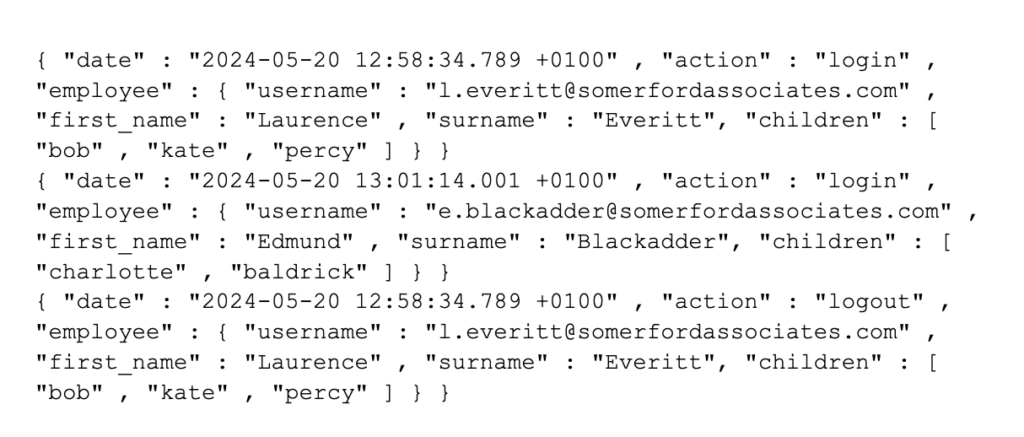
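Python's json module shows why arrays are the safer choice. This sketch uses invented employee data and a hypothetical reject_duplicates function, passed through the standard object_pairs_hook parameter of json.loads, to flag repeated keys:

```python
import json

def reject_duplicates(pairs):
    """object_pairs_hook that refuses any JSON object containing a repeated key."""
    obj = {}
    for key, value in pairs:
        if key in obj:
            raise ValueError(f"duplicate key: {key!r}")
        obj[key] = value
    return obj

# Hypothetical events: the first repeats the "child" key, the second uses an array.
duplicated = '{"first_name": "Jane", "child": "Alice", "child": "Bob"}'
as_array = '{"first_name": "Jane", "children": ["Alice", "Bob"]}'

# Python's default parser silently keeps only the last value, so "Alice" is lost.
print(json.loads(duplicated))  # {'first_name': 'Jane', 'child': 'Bob'}

# The array version keeps every child and passes the duplicate check.
print(json.loads(as_array, object_pairs_hook=reject_duplicates))
# {'first_name': 'Jane', 'children': ['Alice', 'Bob']}

try:
    json.loads(duplicated, object_pairs_hook=reject_duplicates)
except ValueError as err:
    print(err)  # duplicate key: 'child'
```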

• In most cases, each log event will be transmitted as a JSON object in its own right, so make sure that each event starts on a new line (a Carriage Return/Line Feed on Windows or a Line Feed on macOS/Linux) so that line breaking is easy for Splunk (although events can be split over multiple lines for readability). So the following events are good:

• However, the below is not recommended, as event breaking can be difficult to configure: the regex needed to identify each event boundary may be complex, especially if events include nested JSON objects such as this:

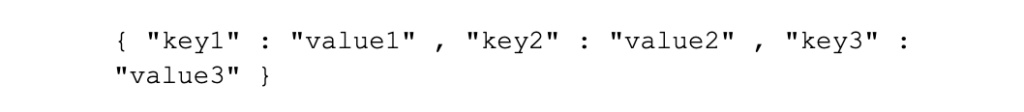

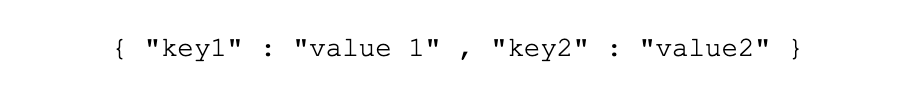

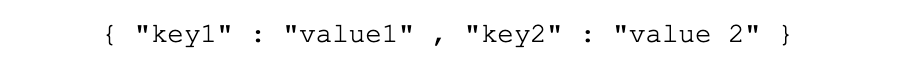
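Writing one compact JSON object per line is easy if you let a JSON library do the escaping. In this minimal Python sketch (the events are invented), a message containing a newline is escaped as \n, so each event still occupies exactly one line of the file and a simple newline-based event break is enough:

```python
import json

# Hypothetical events to be written as one JSON object per line.
events = [
    {"date": "2024-10-28T09:15:00+00:00", "action": "login", "message": "user signed in"},
    {"date": "2024-10-28T09:16:30+00:00", "action": "error", "message": "line one\nline two"},
]

# json.dumps escapes the embedded newline as \n, so each event stays on a single line.
log_text = "".join(json.dumps(event) + "\n" for event in events)

# Reading the text back: every non-empty line is exactly one complete event.
parsed = [json.loads(line) for line in log_text.splitlines() if line]
assert parsed == events
print(log_text, end="")
```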

• Be consistent, and make sure that the data is consistent in its structure (i.e. all key-value pairs use the same character layout), such as the following:

• NOTE: Throughout this article, I have used spacing between characters for readability’s sake. The following spacing is equally valid; it is not as easy to read in the file, but Splunk will still display it nicely when you search for it:

• However, the following inconsistent spacing should be avoided:

• Use double quotes for all string values, not just values that contain spaces, as this keeps value formats and searching consistent, so this is good:

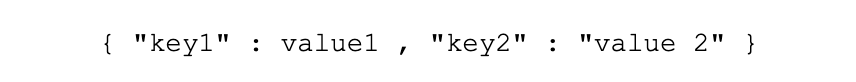

• This JSON object is not valid because strings must be surrounded by double quotes:

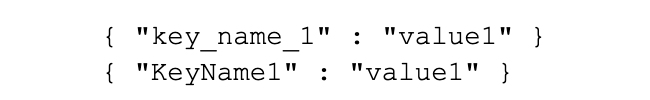

• Where possible, make sure that key names do not include spaces and follow one consistent convention (either lowercase with underscores in place of spaces, or camelCase). Both of these are valid formats for key names:

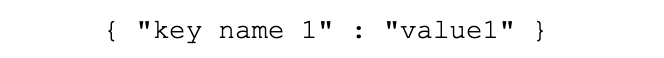

• However, adding spaces into names can make searching in Splunk cumbersome (you will need to add quotes around the field names in the SPL):

• Ensure that ALL events for this sourcetype are generated with the same character set, such as UTF-8 or ASCII.

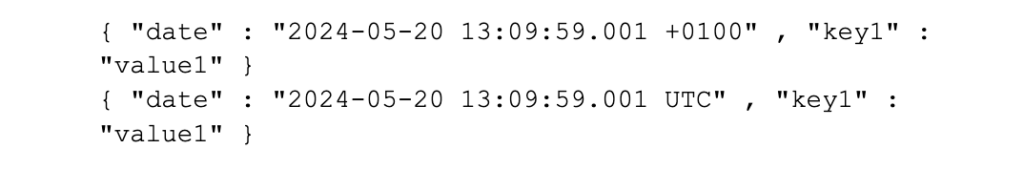
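Key-name conventions and the character set can be checked before logs ever reach Splunk. This is a hypothetical Python sketch: the SNAKE and CAMEL patterns and the bad_keys helper are my own illustration, and you would pick whichever single convention your developers have agreed on:

```python
import json
import re

# Two common key-name conventions; pick ONE for each sourcetype and stick to it.
SNAKE = re.compile(r"^[a-z][a-z0-9]*(?:_[a-z0-9]+)*$")      # e.g. first_name
CAMEL = re.compile(r"^[a-z][a-z0-9]*(?:[A-Z][a-z0-9]*)*$")  # e.g. firstName

def bad_keys(obj, pattern, path=""):
    """Return the dotted paths of keys (at any depth) that do not match pattern."""
    found = []
    if isinstance(obj, dict):
        for key, value in obj.items():
            full = f"{path}.{key}" if path else key
            if not pattern.match(key):
                found.append(full)
            found.extend(bad_keys(value, pattern, full))
    elif isinstance(obj, list):
        for item in obj:
            found.extend(bad_keys(item, pattern, path))
    return found

event = json.loads(
    '{"first_name": "Jane", "surname": "Müller", "userName": "jmuller", "Last Name": "Müller"}'
)
print(bad_keys(event, SNAKE))  # ['userName', 'Last Name']
print(bad_keys(event, CAMEL))  # ['first_name', 'Last Name']

# ensure_ascii=False keeps characters such as "ü" as they are; encoding the result as
# UTF-8 keeps every event for this sourcetype in the same character set.
line = json.dumps(event, ensure_ascii=False).encode("utf-8")
```

Either way, "Last Name" is flagged, because a key with a space fits neither convention.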

• It is recommended that you add time zone information to each event (especially if the application is deployed to systems around the world). This lets Splunk work out exactly when each event occurred, whichever time zone it came from, and reduces administrative complexity, so these formats are both valid:

• Use double quotes for keys and string values, not single quotes, so the following is correct:

• Although it looks similar, do not use single quotes (this is invalid JSON, anyway):

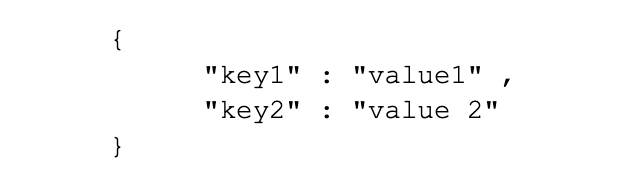
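The quoting rules are easy to confirm with a strict JSON parser. In this Python sketch (the candidate lines are invented), only the fully double-quoted line parses:

```python
import json

# Hypothetical candidate log lines; only the first uses double quotes throughout.
candidates = {
    "double quotes": '{"action": "login", "username": "jsmith"}',
    "unquoted string": '{"action": login, "username": "jsmith"}',
    "single quotes": "{'action': 'login', 'username': 'jsmith'}",
}

results = {}
for label, text in candidates.items():
    try:
        json.loads(text)
        results[label] = "valid"
    except json.JSONDecodeError as err:
        results[label] = f"invalid: {err.msg}"
    print(f"{label}: {results[label]}")

# json.dumps always emits double-quoted keys and strings, so generating logs with a
# JSON library (rather than hand-built strings) avoids both mistakes.
print(json.dumps({"action": "login"}))  # {"action": "login"}
```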

• Avoid indentation in the JSON log file: Splunk automatically formats the event for reading in the GUI, and unnecessary spaces, tabs and newlines increase licence usage. So this is good:

• However, this is not recommended:
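To get a feel for the overhead of indentation, this Python sketch serialises one invented event three ways and compares the byte counts. Under ingest-based licensing, which counts the volume of data indexed, the indented form costs more for exactly the same information:

```python
import json

# Hypothetical event, similar in shape to the Worked Example below.
event = {
    "date": "2024-10-28T09:15:00+00:00",
    "action": "login",
    "employee": {"first_name": "Jane", "surname": "Smith", "children": ["Alice", "Bob"]},
}

compact = json.dumps(event, separators=(",", ":"))  # no optional whitespace at all
default = json.dumps(event)                          # single line, ", " and ": " separators
indented = json.dumps(event, indent=4)               # pretty-printed over many lines

for name, text in [("compact", compact), ("default", default), ("indented", indented)]:
    size = len(text.encode("utf-8"))
    lines = text.count("\n") + 1
    print(f"{name}: {size} bytes on {lines} line(s)")
```

All three parse to the same event; only the single-line forms also keep the one-event-per-line layout recommended earlier.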

How to Configure Splunk to Read Your JSON Log

First off (as I have already alluded to), do NOT use the "_json" sourcetype (in fact, don’t use any of the built-in sourcetypes). Instead, use sourcetypes configured by an App (i.e. already CIM-compliant) or create your own, as they are much better. There are three reasons for this:

1. Firstly, you can add extracted fields to your own sourcetype and make them CIM-compliant, if required. For example, suppose I have two apps that generate JSON logs: one includes a field such as “errortype” and the other a field such as “successful_completion”. If both use the _json sourcetype, finding the right field to work on is much more complex than if each log has its own custom sourcetype, because field extractions (for example) can be attached to the sourcetype rather than to the source.

2. Secondly, searching is easier with a custom sourcetype, because each search can simply filter on that sourcetype, rather than filtering on the _json sourcetype and then on the source.

3. And lastly, the _json sourcetype definition includes indexed extractions, which we want to avoid. In most cases, we do not want Indexed Extractions because they can greatly increase the size of the index on disk (possibly doubling or even tripling it), as all of the extracted values are stored in the index alongside the raw data. The only good reason to use Indexed Extractions is when the data is constantly searched on specific fields (using the double-colon operator, “::”, which is inflexible) and regular search-time field searches are far too slow.

Worked Example

In this case, I am going to take a typical example JSON input, built around the recommendations above (3 events):

Configuration for props.conf

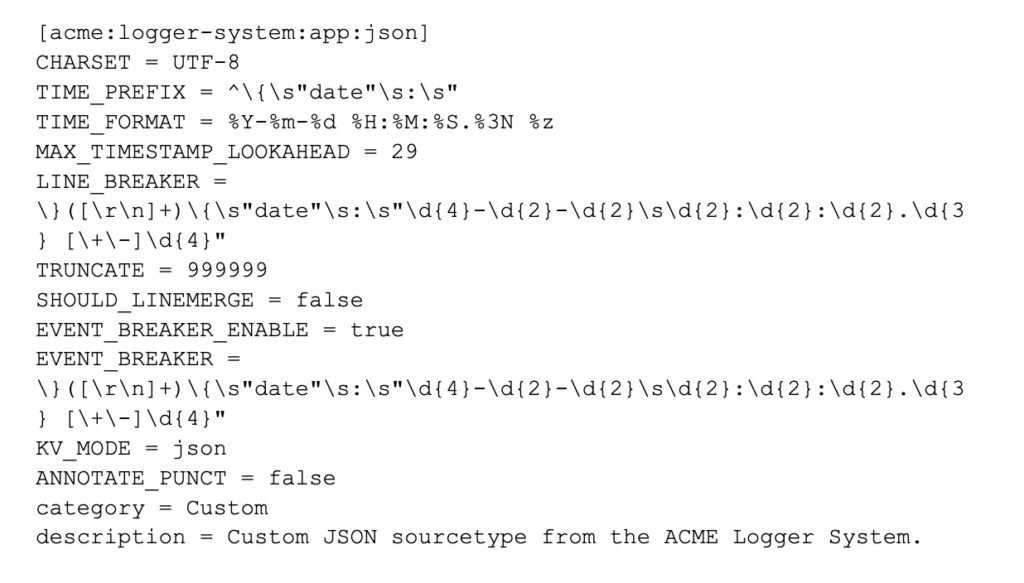

In order to configure Splunk to receive my new events, I need to set up the props.conf to include my new sourcetype. For more information, check out another of my blog entries here or here. For this sourcetype, I would configure the following settings in the props.conf on the first full Splunk instance that received my events:

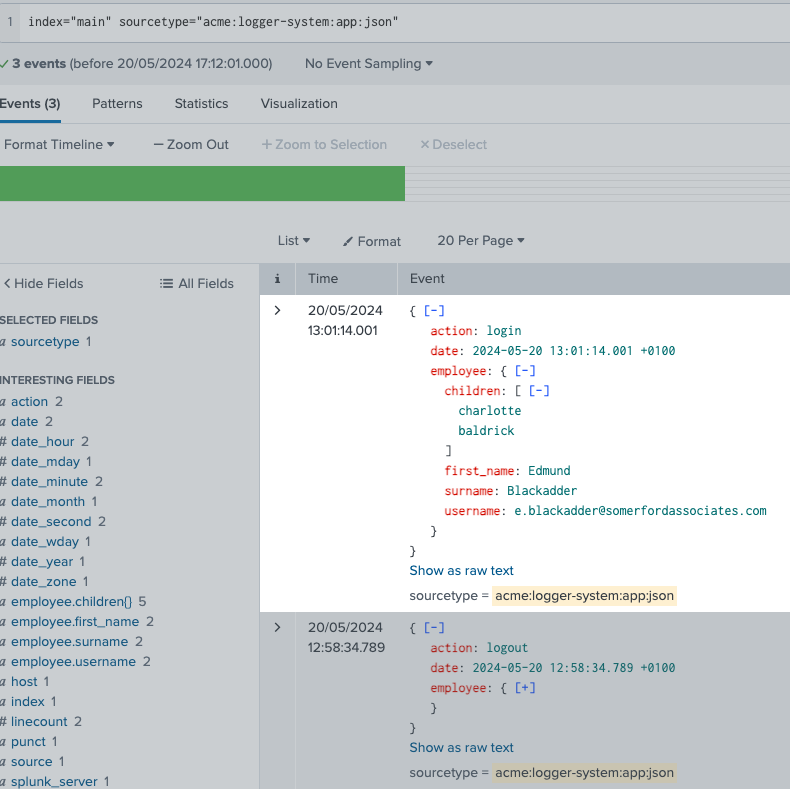

For my Worked Example, I created the props.conf configuration and then used the Add Data functionality of SplunkWeb to import the data and here you can see it!

Because we have set KV_MODE = JSON (and we are searching in Smart or Verbose mode), Splunk has extracted the following fields out of the box, which means that we do not need complex field extractions to get the data out of the JSON:

• action

• date

• employee.children{}

• employee.first_name

• employee.surname

• employee.username
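To show where those names come from, here is a rough Python sketch that walks three invented events shaped like the Worked Example and builds the same style of names: nested keys joined with dots, and arrays marked with {}. The field_names helper is hypothetical and only mimics the naming convention; it is not how Splunk performs the extraction:

```python
import json

def field_names(obj, prefix=""):
    """Hypothetical helper: list field names using dot paths and {} for arrays."""
    names = set()
    if isinstance(obj, dict):
        for key, value in obj.items():
            names |= field_names(value, f"{prefix}.{key}" if prefix else key)
    elif isinstance(obj, list):
        for item in obj:
            names |= field_names(item, prefix + "{}")
    else:
        names.add(prefix)  # a leaf value: record the path that leads to it
    return names

# Three invented events; the real Worked Example input may differ in detail.
employee = {"first_name": "Jane", "surname": "Smith", "username": "jsmith"}
events = [
    {"date": "2024-10-28T09:15:00+00:00", "action": "login",
     "employee": {**employee, "children": ["Alice", "Bob"]}},
    {"date": "2024-10-28T09:20:00+00:00", "action": "update",
     "employee": {**employee, "children": ["Alice", "Bob", "Carol"]}},
    {"date": "2024-10-28T17:30:00+00:00", "action": "logout",
     "employee": {**employee, "children": []}},
]
log_lines = [json.dumps(event) for event in events]

all_fields = set()
for line in log_lines:
    all_fields |= field_names(json.loads(line))
for name in sorted(all_fields):
    print(name)
```

Sorted, this prints the same six names as the list above: action, date, employee.children{}, employee.first_name, employee.surname and employee.username.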

Useful JSON Tools

Tools that I find useful when working with JSON are as follows:

JSONlint - a website which can be used to check JSON - https://jsonlint.com/

JSON Tools - a website for most JSON operations, including prettifying JSON - https://onlinejsontools.com