How Does Netskope Unlock AI Potential?

Author: Jake Hammacott

Release Date: 11/09/2024

ChatGPT, Copilot and Generative AI

In recent years, generative AI models have been used more and more as organisations have realised their benefits. Language models such as ChatGPT have been used to create recipes, check spelling and grammar, and act as a pseudo-search engine. Generative AI also isn’t limited to text-based prompts or responses. DALL·E 2 uses text-based prompts to create unique pieces of visual art in multiple forms, such as pictures, logos and banners. Microsoft’s Copilot has been available specifically to enterprise customers since the end of 2023 and acts as a companion across the Microsoft 365 suite.

Because generative AI is an incredible tool that can assist with many day-to-day tasks, many organisations have seen it used in their end-users’ work. Employees have used generative AIs to write responses to emails, proofread documentation and create sales scripts, to name just a few examples. When generative AIs were first exposed to end-users, responses were typically quite basic, with the tools acting more like chatbots than sophisticated language models. Over time they have matured: generative AIs can now not only write and send emails automatically but also personalise messages based on the recipient’s profile, position and behaviour to increase rapport and, ultimately, response rates.

The Issues with Generative AI

Unfortunately, as beneficial as generative AIs have been to working life, serious security concerns have arisen along with their popularity. Prompting ChatGPT doesn’t involve any other “real person”, but that doesn’t mean there is no risk associated with these communications. For most web-based generative AIs, as soon as you send a prompt, all of the information sent is stored and analysed before a response is returned. In most cases this isn’t an issue, as your prompt likely includes only very generic information.

However, consider the following questions:

• What happens if an end-user sends all the information required to write a complete email to a customer?

• Does that information contain any personally identifiable information?

• Does that information contain any sensitive information, such as purchase order details, card details or GDPR-protected data?

• How can you detect this information being sent outside of the organisation, and protect against it?

Although the end-user isn’t purposefully exfiltrating sensitive information from the organisation, once the data has left the safety of the internal network, you can no longer guarantee its safety and confidentiality.
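To make the detection question more concrete, the following is a minimal sketch of how a prompt could be inspected for sensitive data before it leaves the organisation. It is an illustration only and not how any particular product works: the patterns, the `find_sensitive_data` function and the finding labels are all assumptions made for this example, and real inspection engines use far richer detection than two regular expressions.

```python
import re

# Hypothetical patterns, for illustration only.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_RE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(number: str) -> bool:
    """Return True if a string of digits passes the Luhn checksum."""
    total = 0
    for i, ch in enumerate(reversed(number)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit, counting from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def find_sensitive_data(prompt: str) -> list[str]:
    """Return labels for the kinds of sensitive data found in a prompt."""
    findings = []
    if EMAIL_RE.search(prompt):
        findings.append("email_address")
    for match in CARD_RE.finditer(prompt):
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):  # reduces false positives on arbitrary numbers
            findings.append("card_number")
            break
    return findings
```

A prompt that returns an empty list could be sent as normal, while any finding could be used to block the prompt or warn the end-user, depending on the organisation’s policy.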

Additionally, end-user inputs aren’t the only risk associated with generative AIs in industry. Many free and paid generative AIs aren’t ready for use within organisations. Businesses are now finding themselves in difficult situations, where they recognise the benefit of generative AIs but don’t know how to handle, manage and sanction the different web-apps available.

How Netskope Helps You Safely Use Generative AI

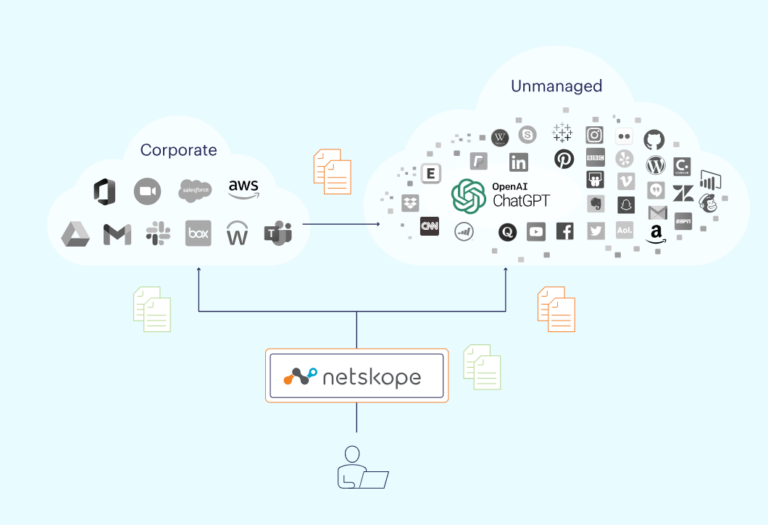

Netskope empowers organisations to have complete control over generative AI usage within their business. Netskope does this through a few different methods:

• Data loss prevention (DLP) rules, which catch and block sensitive data in prompts whilst still allowing safe prompting of generative AIs.

• Generic policies, which treat Generative AI as a category, enabling security engineers to create policies that prevent access to, or even certain actions on, generative AI websites.

• Real-time coaching, which aims to teach end-users how to safely navigate different web scenarios, empowering them to stay vigilant for potential risks when using the internet.

• Application access control, which ensures that end-users are unable to perform actions that violate policies regardless of how they access the application. Multiple URLs are grouped under a single application so that a policy cannot be sidestepped by using a different URL.

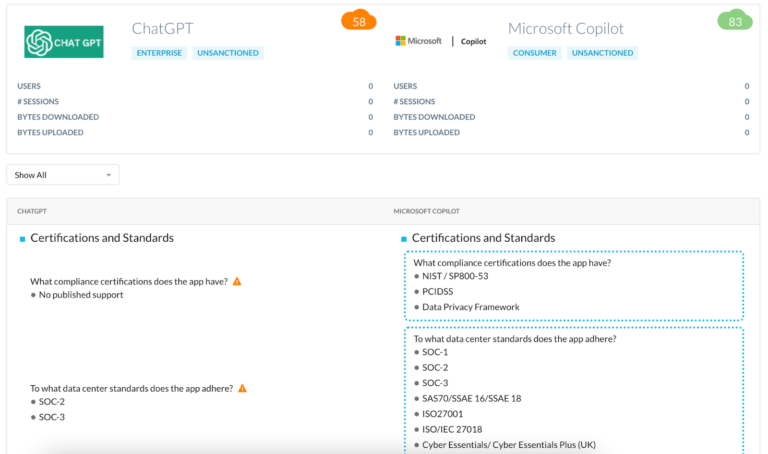

• The Cloud Confidence Index (CCI), which documents a wide range of well-known generative AI web apps and categorises the security findings for each in terms of industry usage. This allows a quick comparison of different generative AIs, highlighting the security concerns of each.
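As a rough illustration of the category policy and application access control ideas in the list above, the sketch below maps several hostnames to one application and category, then evaluates an action against a per-category rule. This is not Netskope’s configuration format or API: `APP_CATALOGUE`, `BLOCKED_ACTIVITIES`, `evaluate` and the activity names are all hypothetical, chosen only to show why grouping URLs under one application stops a rule being bypassed.

```python
from urllib.parse import urlparse

# Hypothetical catalogue: several hostnames resolve to one application
# and one category. The entries are examples, not an exhaustive list.
APP_CATALOGUE = {
    "chat.openai.com": ("ChatGPT", "Generative AI"),
    "chatgpt.com": ("ChatGPT", "Generative AI"),
    "copilot.microsoft.com": ("Copilot", "Generative AI"),
}

# Hypothetical policy: activities that are blocked for a whole category.
BLOCKED_ACTIVITIES = {"Generative AI": {"upload"}}


def evaluate(url: str, activity: str) -> str:
    """Return 'block', 'allow' or 'not_covered' for an activity on a URL."""
    host = urlparse(url).hostname
    entry = APP_CATALOGUE.get(host)
    if entry is None:
        return "not_covered"  # this sketch has no rule for unknown sites
    _app, category = entry
    if activity in BLOCKED_ACTIVITIES.get(category, set()):
        return "block"
    return "allow"
```

Because both ChatGPT hostnames resolve to the same application and category, an upload is blocked whichever URL the end-user visits, while ordinary browsing is still allowed.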

Wrapping Up

Ultimately, AI and generative AIs are invaluable tools for individual and enterprise usage, so finding ways to use them safely and securely is incredibly important before they are adopted into organisations. Netskope eases the burden of managing generative AI usage through functionality that has been built into Netskope’s core for years and has simply been adapted to accommodate these new tools.